The underside of a bridge is a critical area for structural safety, yet for years weak GNSS signals there have made it a “hard nut to crack” for drone inspections. Steel and concrete members block and reflect satellite signals, causing attenuation, multipath errors, and sudden position jumps. When a drone flies into the enclosed space beneath a bridge, its positioning accuracy (often expressed as circular error probable, CEP) can degrade to meter-level errors, and the flight controller’s attitude estimation can even diverge. This severely limits inspection coverage of key areas such as the deck underside and the piers. The problem has led many asset owners to doubt that drones can operate autonomously in such environments, and it has become the top obstacle to market adoption. By 2025, however, breakthroughs in positioning without GNSS signals have enabled drones to operate stably in complex sub-bridge environments.

Single Navigation Technologies: Exploration and Limitations

Early drones relied solely on GPS navigation, which is prone to disruption and loss beneath bridges. Research teams explored various single navigation technologies, each with partial success but clear limitations:

-

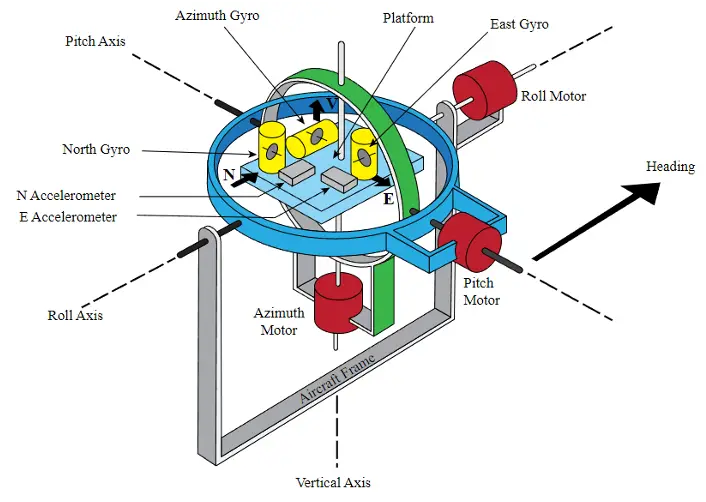

Inertial Navigation Systems (INS): Measures acceleration and angular velocity in real time, integrating data to estimate position in a bridge coordinate system. It works without GPS, maintaining positioning for a limited period during signal loss. However, errors accumulate over time, requiring frequent calibration to sustain high accuracy.

△Inertial Navigation System
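The drift described for INS follows directly from double integration. The sketch below is a minimal 1-D illustration, assuming a constant, uncorrected accelerometer bias of 0.01 m/s² (roughly 1 mg, an illustrative value rather than a figure for any particular sensor) and simple Euler integration. The position error grows as ½·b·t², so doubling the time without correction roughly quadruples the error; gyro errors, ignored here, add further drift through tilt.

```python
def dead_reckon_error(bias_mps2: float, duration_s: float, dt_s: float = 0.01) -> float:
    """Return the position error (m) produced by double-integrating a constant
    accelerometer bias for duration_s seconds (1-D, Euler integration)."""
    velocity_err = 0.0
    position_err = 0.0
    steps = int(round(duration_s / dt_s))
    for _ in range(steps):
        velocity_err += bias_mps2 * dt_s     # first integration: velocity error
        position_err += velocity_err * dt_s  # second integration: position error
    return position_err


if __name__ == "__main__":
    BIAS = 0.01  # m/s^2, assumed illustrative bias (about 1 mg)
    for t in (10.0, 20.0, 60.0):
        err = dead_reckon_error(BIAS, t)
        closed_form = 0.5 * BIAS * t ** 2
        print(f"{t:5.0f} s: simulated {err:8.3f} m, closed form {closed_form:8.3f} m")
```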

-

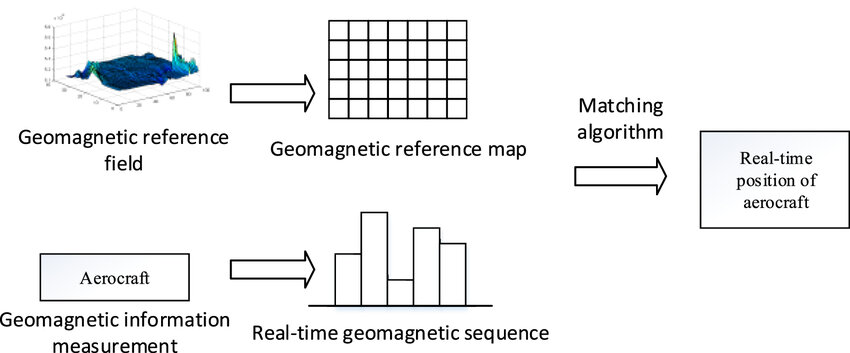

Geomagnetic Navigation: Uses Earth’s magnetic field signatures to determine position by comparing magnetic field readings with a pre-mapped database. But dense steel components under bridges distort the magnetic environment, greatly reducing accuracy and reliability.
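Fingerprint matching of this kind can be sketched as a nearest-neighbour search over the pre-mapped database. In the minimal example below, the map, its 2 m grid, and the field values are all hypothetical; a real system would use a denser survey and would usually combine matches along a trajectory rather than trust a single reading. The second query shows the limitation described above: a local distortion from steel that the map did not record pulls the match to the wrong cell.

```python
import math

# Hypothetical pre-surveyed map: grid position (x, y) in metres -> field (Bx, By, Bz) in microtesla.
FINGERPRINT_MAP = {
    (0.0, 0.0): (22.0, 5.0, -41.0),
    (0.0, 2.0): (25.5, 3.0, -38.0),
    (2.0, 0.0): (19.0, 8.5, -44.0),
    (2.0, 2.0): (30.0, -1.0, -35.5),
}


def match_position(reading, fmap=FINGERPRINT_MAP):
    """Return (position, residual): the map cell whose stored field vector is closest
    (Euclidean distance) to the magnetometer reading, and that distance."""
    position, stored = min(fmap.items(), key=lambda item: math.dist(item[1], reading))
    return position, math.dist(stored, reading)


if __name__ == "__main__":
    # Clean reading taken near (0, 2): matches the correct cell with a small residual.
    print(match_position((25.0, 3.2, -38.4)))
    # Reading at (0, 0) plus an unmapped steel-induced offset of (+8, -6, +5.5) uT:
    # the distorted vector now coincides with the (2, 2) fingerprint instead.
    print(match_position((30.0, -1.0, -35.5)))
```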

-

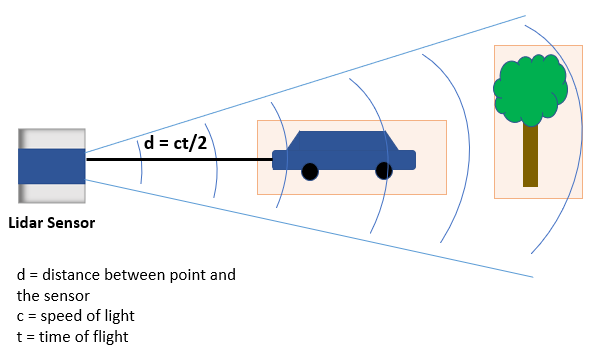

LiDAR Navigation: Emits laser pulses to measure reflections and build 3D environmental models for precise obstacle avoidance and path planning. Yet its range and resolution can be affected by environmental conditions, sometimes falling short of high-precision needs under complex bridge structures.
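For a pulsed LiDAR, each range comes from the round-trip time of a laser pulse, and the beam angles turn that range into a 3D point. The minimal sketch below assumes a sensor frame with x forward, y left, and z up (a convention chosen here for illustration); real sensors add calibration offsets and some use other ranging schemes. It also shows why timing resolution matters: a 1 ns timing error already corresponds to about 15 cm of range.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tof_range(round_trip_s: float) -> float:
    """Range (m) from a pulse's round-trip time: the light travels out and back."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert a range and beam angles to an (x, y, z) point in the assumed
    sensor frame (x forward, y left, z up)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (
        range_m * math.cos(el) * math.cos(az),
        range_m * math.cos(el) * math.sin(az),
        range_m * math.sin(el),
    )


if __name__ == "__main__":
    # A return from the deck underside 6 m directly above the sensor.
    t = 2 * 6.0 / SPEED_OF_LIGHT
    print(to_cartesian(tof_range(t), 0.0, 90.0))
    print(f"1 ns timing error -> {tof_range(1e-9):.3f} m range error")
```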

Visual Odometry: Like “visual memory” for drones, it calculates motion by tracking changes in feature points between consecutive images. Poor lighting under bridges can severely impair performance, and in tunnel-like conditions it may even fail entirely.
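The feature-tracking idea behind visual odometry can be sketched for the simplest case: a camera looking straight up at a flat deck underside at a known distance and translating parallel to it. Under that assumed pinhole geometry (the depth and focal length are illustrative inputs), a pixel shift converts to metres by depth divided by focal length, and the camera moves opposite to the apparent feature motion. Taking the median tolerates an occasional bad match; with no trackable features, as in a dark box girder, the function has nothing to work with and returns None.

```python
from statistics import median


def estimate_translation(prev_pts, curr_pts, depth_m, focal_px):
    """Estimate camera translation (tx, ty) in metres, in image axes, from matched
    feature pixel coordinates, assuming a planar scene parallel to the image plane
    at depth_m and pure translation. Returns None if no features are tracked."""
    if len(prev_pts) != len(curr_pts):
        raise ValueError("prev_pts and curr_pts must be matched pairs")
    if not prev_pts:
        return None
    du = [c[0] - p[0] for p, c in zip(prev_pts, curr_pts)]
    dv = [c[1] - p[1] for p, c in zip(prev_pts, curr_pts)]
    scale = depth_m / focal_px  # metres per pixel at the scene depth
    # Features appear to move opposite to the camera, hence the minus sign.
    return -median(du) * scale, -median(dv) * scale


if __name__ == "__main__":
    prev = [(100, 100), (200, 150), (300, 220), (400, 300)]
    curr = [(80, 100), (180, 150), (280, 220), (450, 330)]  # last match is an outlier
    print(estimate_translation(prev, curr, depth_m=4.0, focal_px=800.0))
    print(estimate_translation([], [], depth_m=4.0, focal_px=800.0))
```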

Multi-Sensor Fusion: The Core Breakthrough

No single navigation method provides the high fault tolerance needed across all scenarios. Researchers therefore developed a multi-source heterogeneous data fusion architecture that integrates the strengths of multiple technologies, delivering centimeter-level accuracy with 99.9% confidence.

In this architecture:

- MEMS INS provides a stable spatial reference for short-term position holding.
- Geomagnetic fingerprinting offers auxiliary positioning that tolerates challenging electromagnetic conditions, since a fingerprint map surveyed on site can record steel-induced field anomalies as part of the location signature.
- Solid-state LiDAR delivers high-precision 3D reconstruction of the bridge underside.
- Visual SLAM continuously corrects position estimates in real time.

By applying deep-learning-based multi-scale feature extraction and adaptive weighting of each source, the system compensates for the weaknesses of any single technology.
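The adaptive-weighting idea can be illustrated with classical inverse-variance weighting, a simpler technique swapped in here rather than the deep-learning scheme the article describes. Each source reports a position estimate with a variance; when a source's quality drops (here, a hypothetical quality score for the camera in poor light), its variance is inflated and its weight shrinks automatically. The sketch assumes independent errors, shows one axis for brevity, and uses illustrative sensor values.

```python
def adapt_variance(base_variance: float, quality: float) -> float:
    """Inflate a sensor's nominal variance as its (hypothetical) quality score in (0, 1] drops."""
    return base_variance / max(quality, 1e-3)


def fuse(estimates):
    """Inverse-variance weighted fusion of independent 1-D estimates.

    estimates: list of (value_m, variance_m2). Returns (fused_value, fused_variance).
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused_value = sum(w * x for w, (x, _) in zip(weights, estimates)) / total
    return fused_value, 1.0 / total


if __name__ == "__main__":
    ins = (10.30, 0.04)      # drifting INS estimate (m, m^2)
    lidar = (10.02, 0.0004)  # LiDAR scan-matching estimate
    for quality in (1.0, 0.01):  # good light vs. dark underside
        camera = (10.05, adapt_variance(0.0025, quality))
        value, var = fuse([ins, lidar, camera])
        print(f"camera quality {quality}: fused x = {value:.4f} m, variance = {var:.6f} m^2")
```

The fused variance is always smaller than the smallest input variance, which is why adding even a mediocre independent source never makes the estimate worse under these assumptions.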

Multi-sensor fusion SLAM is a representative application. It combines visual cameras, LiDAR, and IMUs with simultaneous localization and mapping (SLAM). Visual SLAM uses bridge surface textures or QR code markers for map-assisted localization; for example, a QR-guided system introduced in 2021 showed excellent results. LiDAR SLAM scans bridge structures to generate 3D point clouds for precise obstacle avoidance and path planning, supported by hierarchical SLAM algorithms introduced in 2020. Redundant sensors such as barometers and ultrasonic rangefinders further enhance stability.
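LiDAR SLAM repeatedly aligns each new scan against earlier scans or the map. The core of one such alignment, as used inside point-to-point ICP, is a closed-form least-squares rigid transform between matched points. The sketch below is a generic 2D version with correspondences assumed known (full ICP finds them by nearest-neighbour search and iterates, and 3D systems use an SVD-based form of the same step); it is not the hierarchical 2020 algorithm mentioned above.

```python
import math


def align_2d(source, target):
    """Least-squares rigid transform (theta, tx, ty) mapping each source point onto
    its corresponding target point: target ~ R(theta) * source + t."""
    n = len(source)
    scx = sum(p[0] for p in source) / n
    scy = sum(p[1] for p in source) / n
    tcx = sum(q[0] for q in target) / n
    tcy = sum(q[1] for q in target) / n
    # Accumulate dot and cross terms of the centred point pairs.
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(source, target):
        px, py, qx, qy = px - scx, py - scy, qx - tcx, qy - tcy
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the target centroid.
    tx = tcx - (c * scx - s * scy)
    ty = tcy - (s * scx + c * scy)
    return theta, tx, ty


if __name__ == "__main__":
    scan = [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0), (0.0, 3.0)]
    th = math.radians(30.0)
    moved = [(math.cos(th) * x - math.sin(th) * y + 1.0,
              math.sin(th) * x + math.cos(th) * y + 2.0) for x, y in scan]
    theta, tx, ty = align_2d(scan, moved)
    print(f"theta = {math.degrees(theta):.2f} deg, t = ({tx:.3f}, {ty:.3f}) m")
```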

RIEBO’s Practical Application of the Fusion Solution

RIEBO’s high-precision inertial navigation system uses multi-source data fusion, combining a high-accuracy GNSS receiver, high-performance inertial sensors, and post-mission differential processing software.

When GNSS signals weaken or drop out, the inertial sensors compute position autonomously, keeping errors under 0.5 m for up to 10 minutes and ensuring stable flight and route tracking beneath bridges. After the mission, differential post-processing uses the known coordinates of a ground base station to recalibrate the collected data, removing accumulated drift and delivering centimeter-level horizontal accuracy for 3D models and defect coordinates.
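One simple way to picture how post-processing removes drift is to spread the closure error linearly over the GNSS-denied segment: the drone enters with a good fix, flies on inertial data, and gets a good fix again on exit. The sketch below assumes exactly that, with hypothetical timestamps and positions. It is a simplified stand-in: real differential post-processing works on raw GNSS observations referenced to the base station and relies on more rigorous estimators, such as Kalman filtering with forward-backward smoothing, rather than a straight-line correction.

```python
def correct_drift(times, ins_positions, exit_fix):
    """Spread the position mismatch at the exit fix linearly in time over the segment.

    Assumes ins_positions[0] was initialised from a good GNSS fix at times[0] and that
    times[0] != times[-1], so the correction is zero at entry and equals the full
    mismatch at times[-1].
    """
    t0, t1 = times[0], times[-1]
    ex = exit_fix[0] - ins_positions[-1][0]
    ey = exit_fix[1] - ins_positions[-1][1]
    corrected = []
    for t, (x, y) in zip(times, ins_positions):
        frac = (t - t0) / (t1 - t0)  # fraction of the segment elapsed
        corrected.append((x + frac * ex, y + frac * ey))
    return corrected


if __name__ == "__main__":
    times = [0.0, 100.0, 200.0, 300.0]  # s spent inside the box girder
    ins = [(0.0, 0.0), (10.1, 0.05), (20.2, 0.10), (30.3, 0.15)]  # drifting INS track (m)
    exit_fix = (30.0, 0.0)  # GNSS position on exit (m)
    for p in correct_drift(times, ins, exit_fix):
        print(f"({p[0]:.2f}, {p[1]:.2f})")
```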

To counter electromagnetic interference from dense steel structures, the hardware employs shielding designs to ensure stable sensor data transmission and minimize positioning drift.

In one river-crossing bridge project, traditional drones repeatedly crashed into beams due to GNSS signal loss. With RIEBO’s system, the drone flew stably along preset routes inside a GNSS-denied box girder, capturing multiple high-resolution defect images. Inspection efficiency improved by 80% over traditional methods—without a single safety incident.

From “Dead Zones” to Fully Inspectable Areas

Thanks to breakthroughs in multi-sensor fusion, drone bridge inspections can now achieve millimeter-level precision even in GNSS blind spots. What were once “no-go zones” are now fully inspectable. These advancements boost inspection efficiency by 5–8× over manual methods, cut costs by 40–60%, and ensure robust safety monitoring of bridge undersides—safeguarding long-term structural health.